This is a repost from: https://labs.spotify.com/2016/02/22/things-we-learned-creating-technology-career-steps/

This is part three of a three-part series on how we created a career path framework for the individual contributors at Spotify. Part one discussed the process we used to formulate the framework. Part two contained version 1.0 of our framework. In this segment, I’ll talk about the lessons we learned rolling out the framework to the technology organization. If you haven’t read the first two parts, I suggest that you read those first before proceeding with this one.

The Launch to the Organization

We launched the request for comments (RFC) version of Career Steps at a special town hall for Spotify’s entire technology department in December, 2014. Leading up to the town hall we’d done several reviews of earlier iterations with increasingly larger groups from the organization, and we’d given trainings in Steps and conversations around Steps for every manager in Technology. In the presentation, we left plenty of time for questions, which was good. We prompted people to read the document, ask questions in the document, via a mailing list or a slack channel that we set up, or to ask their manager. In retrospect, we should have followed up with some more opportunities to talk to our working group face-to-face. Most of the organization would have been reading the document for the first time after the town hall, and some had only read earlier drafts.

The Relationship between Steps and Compensation

There was one area that was still incomplete when we launched Steps, and this turned out to be an issue that challenged us for a long time. This was the connection between your step and your salary. We had asserted that there was a connection, but at the point that we launched the framework, our Compensation and Benefits team had not finished their review of how this should work. We knew the generalities, but not the details. This left us in a very challenging situation, since we had given Steps a critical connection to the individuals in the organization, but we couldn’t yet explain it. We knew that there needed to be a connection between Steps and compensation. If we were saying that Steps embodied what we valued from the members of our organization, but the salary of those members was determined in a completely separate way; we were essentially contradicting ourselves and undercutting the effectiveness of the framework.

The lack of clarity around compensation created some tension in the adoption of Steps with some individuals in the organization. Unfortunately, the connection between salary and step wasn’t resolved until nearly a year later. This meant that the first salary review after launching Steps wasn’t able to use the framework. On one level this was a failure, but in some ways this was also positive. Since Steps were very new, having people get a step and then immediately have it affect their salary might have lead to a lot bad feelings. By delaying the tie to compensation people had time to adjust to the system and potentially change their step before their first salary review.

Our inability to talk concretely about Steps and salary also created misperceptions around how this would work. We had to spend a lot of effort working through these only to have them re-appear in mail threads and discussions again and again. The most persistent misperception was that the only way to change your salary was by changing your step. This naturally caused much concern that was difficult to dispel until the Compensation and Benefits team had completed their work. Our C&B team did a stellar job on creating a fair and very progressive system that allowed good overlap in salary ranges between the steps and a lot of headroom. Since that has been completed, we hopefully have put many of the concerns to rest; especially as we now using Steps as part of our current salary review.

Behavior Versus Achievements

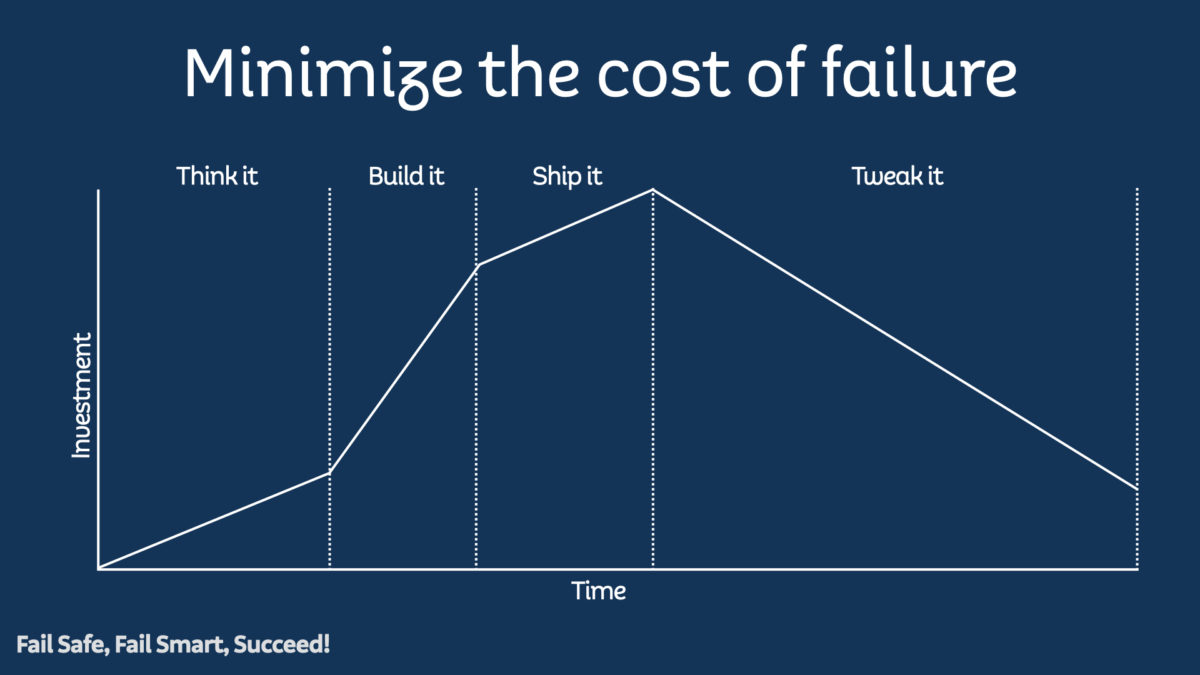

We made an explicit decision around Career Steps that career growth was characterized by your behaviors and not by your achievements. This is counter to the way career advancement works at many other companies. It was something that we not only felt strongly about; it was something that we were proud of. In a culture that encourages innovation, failure (and learning from failure) is a natural occurrence. If we only rewarded success, then we were consequently punishing failure and discouraging risk-taking. We also wanted to encourage real personal growth, and not a culture of checking off achievements on a list and expecting a promotion. This naturally created some ambiguity around the requirements for each step. The working group embraced this ambiguity as a way of giving managers some room to make decisions and also to encourage discussion between the individual and their manager.

In retrospect, our approach was a bit naive in a few ways. While we as an organization are very comfortable with ambiguity in many areas, this was something that was personal, and had personal consequences. Some individuals were very uncomfortable with this ambiguity, and would have preferred more concrete requirements around advancement. There was another group that thought even the examples given were too much of a checklist. This is still something that we are working through.

We are looking for ways to support those in the organization who would like more clarity without being too prescriptive. We have discussed creating lists of (anonymized) examples of the behaviors. The concern is that this would lead to people treating the examples as achievements to be checked off instead.

This has been a particularly thorny path to traverse with some very vocal minorities, but the majority of the organization seems to have understood the concept.

Having Greater Impact Means Not Working on a Team?

There was one aspect of the Steps Framework that we hadn’t anticipated being controversial, and didn’t come up often in our earlier reviews of the document. This was the aspect of Steps being a reflection of your sphere of influence. The core idea around this was that as you increase your professional maturity, you naturally will become a resource for ever larger parts of the organization; either through your technical leadership, your deep technical skills, or your general problem solving ability. This increasing sphere of influence brings new, broader, responsibilities with it. This was well aligned with our own personal experiences in the working group at Spotify and other companies. For some individuals, the idea that they couldn’t move to a Tribe/Guild step without working outside their squads was a concern.

At Spotify, we point to the squad as the central place that work gets done, it is the top of our inverted servant-leadership hierarchy. The question we heard repeatedly was: why couldn’t someone just be better at what they do and get “promoted” for doing the exact same role if they were adding value to their team? I think that this was a case where the we could have presented the reasoning of this decision in a better way in the document.

Not Enough Chances For Advancement?

When deciding on the number of steps to create, we had decided to keep the number relatively small. The thought being that it was easier to add more than it was to remove some. Four steps is not many changes in a potentially forty-plus year career. The expectation was that people would potentially stay at a step for a very long time. This turned out to be very discouraging for some people who felt that they would never be “promoted” in their Spotify career.

Here we missed the understanding that a segment of our organization had been wanting the opportunity for recognition on increasing advancement. We had not been doing this before in any respect, so we hadn’t considered that it was something that a group really felt the absence of. It turns out that we were wrong about that and with Steps we weren’t giving that group enough opportunity to get it. This was a big oversight since we knew that part of what had been encouraging people to make career changes to management or product was this lack of recognition. This is an active area of discussion for next iterations of the framework.

Assigning Steps

After the town hall presentation introducing Steps, we gave people some time to respond to the RFC version of the document and feedback to the working group. In January, we started the process of making sure every individual contributor in the technology organization had a step. Initially, we left the process up to each tribe. This was a definite mistake. The tribes needed some more guidance on a good way to make this process work. Luckily, at Spotify, we are quite good at figuring these things out and sharing good ideas. The Infrastructure and Operations Tribe came up with the suggestion: each individual should use the Steps document to make a self-assessment of which step they should be on, then have a discussion with their manager about where they agreed and disagreed. This was quickly adopted as a good process across most of the organization.

Once these discussions were completed in each tribe, the tribe would meet to make sure that each manager was assigning steps to their employees in a similar way. Additionally, functional managers in different parts of the organization also met to do a similar exercise. These synchronizations helped make sure that we were being fair and consistent across the entire organization. Before the steps for individuals were finalized, the Tribe Leads and CTO met to go over all the recommendations for the Tribe/Guild and Tech/Company steps. This ended up serving two very valuable purposes: it assured consistency across the entire organization for the individuals who were on these steps, and it made the senior technical leadership aware of who these individuals were and what their manager thought they contributed to the organization.

The process of assigning steps to individuals completed in March, but here we also had a problem. The effort of adding steps into our HR systems ended up being a bigger challenge than our HRIS team had anticipated. So, the steps were assigned in the spring, but weren’t easily visible to employees, their managers or HR until the fall. This also meant we had difficulties tracking which teams were behind in submitting steps for their employees. We couldn’t easily run reports to see how steps were distributed as well.

Once this was finally worked out, we found that we were missing a lot of data. Some employees had never been assigned a step. Some employees had transitioned between teams or roles and their step hadn’t been communicated. Steps hadn’t been integrated into the onboarding process, so many new employees had never gotten their step. There were a bunch of other issues as well. All of these had to be rectified before we could do our salary review. The lack of visibility also contributed to an “out of sight, out of mind” issue where some people or managers didn’t do much with the framework after the initial discussion.

In retrospect, the working group should have taken ownership of this until the issues with the HR system were handled. Unfortunately, there was some poor communication around the timeline for the fixes and we were always thinking that the issue was nearly solved.

Positive Results

Once the Steps Framework was launched, we started getting strong positive feedback from the organization in addition to the concerns noted above. Many of our line managers (Chapter Leads) were previously individual contributors; they especially appreciated having a structure to help frame personal development discussions. Also many individuals told us how it was good to have some structure and understanding about how to grow at Spotify. The working group collected feedback in multiple ways including doing interviews with employees in different offices.

We had wanted to have some real data in addition to our anecdotal evidence to have greater confidence we were actually adding value. Some strong supporting data came from our yearly Great Place to Work survey, where in the Technology Organization, the “Management makes its expectations clear” measurement increased by 4 percentage points year over year, and the “I am offered training or development to further myself professionally” measurement increased by 6 percentage points. There were several things that could have impacted these measurements, but we had reasonable belief that Steps had a part in these increases.

While we had started our effort with the desire of being data-driven, as we went to measure our impact it was clear that we were not as rigorous as we should have been. We should have started the entire effort with some qualitative numbers on how the organization felt about the support they had for personal development driven by a survey or poll. This would have given us better metrics to measure ourselves against, and would help us to guide future iterations as well. This is something that the working group is actively pursuing.

Loss of Focus

Once the steps had been assigned, the working group started moving into a less active phase. There was a strong sense of accomplishment, but also weariness. From meeting twice a week and working on the document in between the meetings, meeting with individuals and teams to discuss steps, training the managers, launching the effort and supporting the initial rollout, there had been a tremendous amount of work done. However, this wasn’t anyone’s main job; we were all moonlighting to do this. Also during this time many of us were involved in a large project that also was demanding our focus. It felt like we had completed our mission and that it was time to move onto new things. Unfortunately, we were wrong.

In this post-launch phase is where we made some of our biggest mistakes. We’d launched the framework, but our support for it was largely put on hold. We hadn’t put the necessary structures in place to make sure that new employees were being trained in Steps. We stopped sending updates and communicating about what was happening around Steps. This lead to a lot of questions and confusion in the organization around what was going on with the effort.

Eventually, we realized our error. At that point we had to do some cleanup and rebuilding work to get the program back on track. Luckily during this time, the HRIS team had done their thing, as had Comp and Benefits. We also had our first Steps-enabled salary review to do. We were also very fortunate that parts of the organization had really started to embrace Steps and were using it in several ways to support individual growth through structured trainings and formal or informal mentoring. Having these efforts emerge helped keep Steps from completely being forgotten in the technology team.

The working group had been inactive too long at this point. Most of the members had new commitments. For a time we continued moving the effort forward with the participation we had, but it was clear that we needed to move into a new phase, which would require a new group.

Current Status

Currently, the Working Group is reforming with new members. The current members are engaged with our Human Resources team on training in Steps for Managers and Employees. We’re also starting working on the next iteration of Steps addressing some of the lessons learned and feedback now that Technology has been living with the framework for a while. The new group will have a few members from the first version, which will give some continuity to the effort and provide some history, but the rest of the group will be new, which should inject some new ideas and approaches to the work.

The leadership of the technology organization has been doing a lot to support the framework. We are actively giving more responsibility and opportunities to our Tribe/Guild step employees and we are also looking to grow our Squad/Chapter step employees to the next step if that is what they are interested in.

We’ve had several promotions between steps during the year; which is a serious measure of validation. If people weren’t moving between steps that would be a major issue.

Additionally, in my own organization, two managers who were also extremely skilled technologists moved back to being individual contributors. They were excited by the possibility of being able to be technical leaders without being people managers.

Many tribes are now starting to consider their Tribe/Guild step people as incremental members of their squads so that when they are helping outside their squad they don’t adversely affect the ability for the squad to get its work done.

Lessons

We learned many lessons in the creation of Steps. If there was one thing that I think was paramount, it was that this ended up being a lot more like Culture Change than we expected it would be. Our view was that we were creating something that would support and reinforce our company culture. In fact, we were specifying something where there had been only people’s own conceptions before. So, while we feel that we did create something generally well aligned and supportive of the Spotify culture, it was still a change for many individuals in the organization. If we had realized that, we would have treated the rollout much more like a Culture Change effort, and used more of those techniques in our rollout plans.

Another major lesson that I didn’t capture above was that we didn’t really have our long term support plan in place. We did put together a plan around supporting the effort, but that plan was created after the launch of Steps. As I mentioned above, the working group was pretty tired from the effort of getting the framework to that point. It would have been much better to think through the long term support needs of the work closer to the beginning of the effort and then adjust them later as we learned more.

I think that the process of using increasingly large groups to give feedback was quite good. It definitely resulted in a much better result than if we had just generated the framework in a smaller, more isolated, group. We primarily used the managers and leadership of the organization to collect feedback though. We would have benefited from including groups of individual contributors as well, especially if we could include the same people in multiple reviews of the document, just as we did with the coaches and leadership.

There was something that I neglected to mention in the first post that I thought was quite valuable. Once the Steps Framework was starting to solidify, we did a “simulation” to see what a potential distribution of steps was in the organization might be once we rolled it out. The committee had what we thought was an ideal distribution based on Spotify’s hiring strategy and what would make sense for an organization of our size. We asked each manager to estimate (in a non-binding way) which step each of their employees was on. We then combined these to get a master histogram of what the distribution might be. Luckily for us, we got a distribution fairly close to what we were hoping for. So, it was a good validation of the Framework at that point. This also had the effect of making each manager have to apply the Framework in a concrete way, which also generated more good feedback.

In addition to the lessons in the rest of this document, I want to reinforce the issues we encountered by underestimating the efforts required from Compensation and Benefits as well as our back-office systems to support this work. While HR was involved from the onset, we didn’t really take into account the timelines for the structural support to make the whole effort work. We should have involved those groups from the beginning, which would have avoided difficulties later.

Conclusions

In the process of leading the effort around career pathing in the technology organization at Spotify, I learned a tremendous amount. While I obviously spent more time thinking about how to motivate and incentivize employees than I ever had before, I also learned a lot more about my company and my coworkers than I had expected to. I was also reminded clearly how different my path had been than any of my fellow members of Technology. Things that I had learned experientially, I had to put reasoning and explanation behind and that was incredibly valuable. Many of our employees had never been at a company with career path support at all before. Those with experience at other companies that had career path frameworks, had not seen anything like the working group had created. As I talked with people individually and in small groups, I found better and better ways to articulate the reasoning behind the things that had just seemed obvious to us as we wrote the document. This helped us improve the document immeasurably and hopefully also has helped clarify the things that I’ve written about in these posts.

The effort of creating this framework also raised some issues in the organization that we’ll need to address as a group. The culture of Spotify is characterized by the Swedish word “lagom.” We try to see each other always as equals. We encourage people to challenge their leaders if they don’t think they are right. As is reflected in the Steps document, we put higher value on strong teams than on strong individuals. We also imbue our teams and our individuals with autonomy in how they do their work. We tried to incorporate all of these ideas in our Career Steps. When people in the organization wish for more recognition and status to be reflected in our career pathing, is this counter to our culture, or is this indicative of the culture changing? What is the role of career pathing in enforcing a desired culture rather than supporting it? These are questions we’ll continue to look at as we evolve Career Steps.

Spotify is a unique company, with a singular culture. The specifics of what we created and the lessons that we learned may or may not apply to your company. In aggregate, hopefully you will find our experiences and learnings valuable as you think through how you want to do similar programs at your own company.