Apportioning Monoliths: my talk from Minsk

tweet: My talk from Minsk: Apportioning Monoliths – http:…

tweet: gmail really needs a multi-select delete/no mechan…

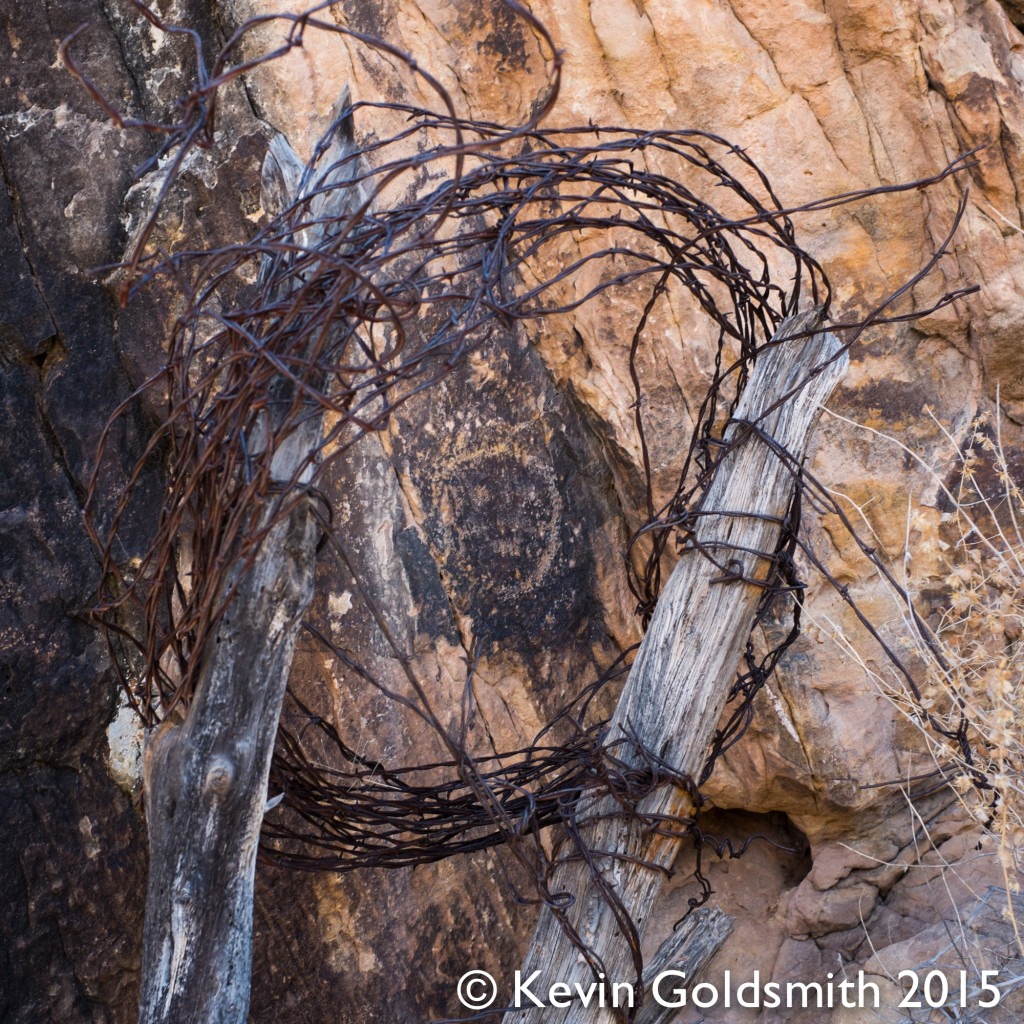

New Mexico

Met with some fine folks from the US Navy today, and got my first challenge coin! instagram.com/p/0_DF_5w0OY/